More Spam To Follow

Published 21 years, 1 month past

So… rel="nofollow". Now there’s a way to deny Google juice to things that are linked. Will it stop comment spam? That’s what I first thought, but I’ve come to realize that it’ll very likely make the problem worse. In the last few hours, I’ve been hearing things that support this conclusion.

First, the by-now required disclaimer: I think it’s great that Google is making a foray into link typing, and I don’t think they should reverse course. For that matter, it would be nice if they paid attention to VoteLinks as well, and heck, why not collect XFN values while they’re at it? After all, despite what Bob DuCharme thinks, the rel attribute hasn’t been totally ignored these past twelve years. There is link typing out there, and it’s spreading. Why not allow people to search their network of friends? It’s another small step toward Google Grid… but I digress.

The point is this: rather than discourage comment spammers, nofollow seems likely to encourage them to new depths of activity. Basically, Google’s move validates their approach: by offering bloggers a way to deny Google juice, Google has acknowledged that comment spam is effective. This doesn’t mean the folks at Google are stupid or evil. In their sphere of operation, getting comment spam filtered out of search results is a good thing. It improves their product. The validation provided to spammers is an unfortunate, possibly even unanticipated, side effect.

There is also the possibility, as many have said, that nofollow will harm the Web and Google’s results, because blindly applying a nofollow to every comment-based link will deny Google juice to legitimate, interesting stuff. That might be true if nofollow is used like a sledgehammer, but there are more nuanced solutions aplenty. One is to apply nofollow to links for the first week or two after a comment is posted, and then remove it. As long as any spam is deleted before the end of the probation period, it would be denied Google juice, while legitimate comments and links would eventually get indexed and affect Google’s results (for the better).
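To make the probation idea concrete, here is a minimal sketch of how a blog's templating code might decide whether a comment link gets nofollow. Everything named here is an assumption for illustration: the `comment_link` function, the `PROBATION` constant, and the 14-day window (I only said "a week or two") don't come from any particular blogging tool.

```python
from datetime import datetime, timedelta, timezone
from html import escape

# Assumed probation window; anything in the "week or two" range would do.
PROBATION = timedelta(days=14)


def comment_link(url, text, posted_at, now=None):
    """Render a comment link, adding rel="nofollow" only during probation.

    posted_at and now are timezone-aware datetimes. Hypothetical helper,
    not part of any real blogging package.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    # Comments younger than the probation window are denied Google juice.
    on_probation = (now - posted_at) < PROBATION
    rel = ' rel="nofollow"' if on_probation else ""
    return f'<a href="{escape(url, quote=True)}"{rel}>{escape(text)}</a>'
```

Because the attribute is computed at render time from the comment's age, nothing has to go back and edit old comments: a link that survives moderation simply loses its nofollow once the window passes.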

In such a case, though, we’re talking about a managed blog—exactly the kind of place where comment spam had the least impact anyway. Sure, occasionally the Googlebot might pick up some spam links before the spam was removed from the site, but in general spam doesn’t survive on managed sites long enough to make that much of a difference.

Like Scoble, I might find nofollow useful if I wanted to link to the site of a group or person I severely disliked in order to support a claim or argument I was making. It would be a small thing, but still useful on a personal level. (I’d probably also vote-against the target of such a link, on the chance that one day indexers other than Technorati’s would pay attention.)

No matter what, the best defense against comment spam will be to prevent it from ever appearing in the first place. There are of course a variety of methods to accomplish this, although most of them seem doomed to fail sooner or later. I’m using three layers of defense myself, the outer of which is currently about 99.9% effective in preventing spam from ever hitting the moderation queue, let alone making it onto the site. One day, the layer’s effectiveness will very suddenly drop to zero. The second layer was about 95% effective at catching spam when it was the outer layer, and since it’s content-based, it will likely stay at that level over time. The final layer is a last-ditch picket line that only works in certain cases, but is quite effective at what it does.

So what are these layers, exactly? I’m not telling. Why not? Because the longer these methods stay off the spammers’ radar, the longer the defenses will be effective. Take that outer layer I talked about a moment ago: I know exactly how it could be completely defeated, and for all time. Think I’m about to explain how? You must be mad.

The only spam-blocking method I can think of that has any long-term hope of effectiveness is the kind that requires a human brain to circumvent. As an example, I might put an extra question on my comment form that says “What is Eric’s first name?” Filling in the right answer gets the post through. (As Matt pointed out to me, Jeremy Zawodny does this, and that’s where I got the idea.) That’s the sort of thing a spambot couldn’t possibly get right unless it was specifically programmed to do so for my site—and there’s no reason why any spammer would bother to program a bot to do so. That would leave only human-driven spam, the kind that’s copy-and-pasted into the comment form by an actual human, and nothing besides having to personally approve every single post will be able to stop that completely.
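For the curious, the extra-question check could be as small as the sketch below. This is not what I actually run (see above about not telling); the `challenge` field name, the function names, and the "rejected"/"queued" return values are all made up for the example.

```python
def passes_challenge(submitted, expected="Eric"):
    """Return True when the answer matches, ignoring case and stray whitespace."""
    return submitted.strip().casefold() == expected.casefold()


def handle_comment(form):
    """Reject a submission outright unless the challenge answer is right.

    form is a plain dict of submitted fields; 'challenge' is a hypothetical
    field name for the "What is Eric's first name?" question.
    """
    if not passes_challenge(form.get("challenge", "")):
        return "rejected"
    # A correct answer only gets the comment as far as moderation.
    return "queued"
```

The comparison is deliberately forgiving about capitalization and spacing, since the point is to stop generic bots, not to trip up people who type " eric".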

So, to sum up: it’s cool that Google is getting hip to link typing, even though I don’t think the end result of this particular move is going to be everything we might have hoped. More active forms of spam defense will be needed, both now and in the future, and the best defense of all is active management of your site. Spammers are still filthy little parasites, and ought to be keelhauled. In other words: same as it ever was. Carry on.

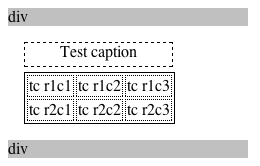

In Safari, you see, the caption’s element box is basically made a part of the table box. It sits, effectively, between the top table border and the top margin. That allows the caption’s width to inherently match the width of the table itself, and causes any top margin given to the table to sit above the caption. Makes sense, right? It certainly did to me.

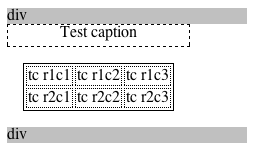

This is the behavior evinced by Firefox 1.0, and as unintuitive as it might be, it’s what the specification demands.
