Blinded By the Light DOM

Published 1 year, 8 months past

For a while now, Web Components (which I’m not going to capitalize again, you’re welcome) have been one of those things that pop up in the general web conversation, seem intriguing, and then fade into the background again.

I freely admit a lot of this experience is due to me, who is not all that thrilled with the Shadow DOM in general and all the shenanigans required to cross from the Light Side to the Dark Side in particular. I like the Light DOM. It’s designed to work together pretty well. This whole high-fantasy-flavored Shadowlands of the DOM thing just doesn’t sit right with me.

If it does for you, that’s great! Rock on with your bad self. I say all this mostly to set the stage for why I only recently had a breakthrough using web components, and now I quite like them. But not the shadow kind. I’m talking about Fully Light-DOM Components here.

It started with a one-two punch: first, I read Jim Nielsen’s “Using Web Components on My Icon Galleries Websites”, which I didn’t really get the first few times I read it, but I could tell there was something new (to me) there. Very shortly thereafter, I saw Dave Rupert’s <fit-vids> CodePen, and that’s when the Light DOM Bulb went off in my head. You just take some normal HTML markup, wrap it with a custom element, and then write some JS to add capabilities which you can then style with regular CSS! Everything’s of the Light Side of the Web. No need to pierce the Vale of Shadows or whatever.

Kindly permit me to illustrate at great length and in some depth, using a thing I created while developing a tool for internal use at Igalia as the basis. Suppose you have some range inputs, just some happy little slider controls on your page, ready to change some values, like this:

<label for="title-size">Title font size</label>

<input id="title-size" type="range" min="0.5" max="4" step="0.1" value="2" />

The idea here is that you use the slider to change the font size of an element of some kind. Using HTML’s built-in attributes for range inputs, I set a minimum, maximum, and initial value, the step size permitted for value changes, and an ID so a <label> can be associated with it. Dirt-standard HTML stuff, in other words. Given that this markup exists in the page, then, it needs to be hooked up to the thing it’s supposed to change.

In Ye Olden Days, you’d need to write a function to go through the entire DOM looking for these controls (maybe you’d add a specific class to the ones you need to find), figure out how to associate them with the element they’re supposed to affect (a title, in this case), add listeners, and so on. It might go something like:

let sliders = document.querySelectorAll('input[type="range"]');

for (let i = 0; i < sliders.length; i++) {

let slider = sliders[i];

// …add event listeners

// …target element to control

// …set behaviors, maybe call external functions

// …etc., etc., etc.

}

Then you’d have to stuff all that into a window.onload handler or otherwise defer the script until the document is finished loading.

To be clear, you can absolutely still do it that way. Sometimes, it’s even the most sensible choice! But fully-light-DOM components can make a lot of this easier, more reusable, and robust. We can add some custom elements to the page and use those as a foundation for scripting advanced behavior.

Now, if you’re like me (and I know I am), you might think of converting everything into a completely bespoke element and then forcing all the things you want to do with it into its attributes, like this:

<super-slider type="range" min="0.5" max="4" step="0.1" value="2"

unit="em" target=".preview h1">

Title font size

</super-slider>

Don’t do this. If you do, then you end up having to reconstruct the HTML you want to exist out of the data you stuck on the custom element. As in, you have to read off the type, min, max, step, and value attributes of the <super-slider> element, then create an <input> element and add the attributes and their values you just read off <super-slider>, create a <label> and insert the <super-slider>’s text content into the label’s text content, and why? Why did I do this to myse — uh, I mean, why do this to yourself?

Do this instead:

<super-slider unit="em" target=".preview h1">

<label for="title-size">Title font size</label>

<input id="title-size" type="range" min="0.5" max="4" step="0.1" value="2" />

</super-slider>

This is the pattern I got from <fit-vids>, and the moment that really broke down the barrier I’d had to understanding what makes web components so valuable. By taking this approach, you get everything HTML gives you with the <label> and <input> elements for free, and you can add things on top of it. It’s pure progressive enhancement.

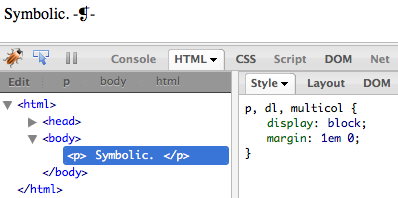

To figure out how all this goes together, I found MDN’s page “Using custom elements” really quite valuable. That’s where I internalized the reality that instead of having to scrape the DOM for custom elements and then run through a loop, I could extend HTML itself:

class superSlider extends HTMLElement {

connectedCallback() {

//

// the magic happens here!

//

}

}

customElements.define("super-slider",superSlider);

What that last line does is tell the browser, “any <super-slider> element is of the superSlider JavaScript class”. Which means, any time the browser sees <super-slider>, it does the stuff that’s defined by class superSlider in the script. Which is the thing in the previous code block! So let’s talk about how it works, with concrete examples.

It’s the class structure that holds the real power. Inside there, connectedCallback() is invoked whenever a <super-slider> is connected; that is, whenever one is encountered in the page by the browser as it parses the markup, or when one is added to the page later on. It’s an auto-startup callback. (What’s a callback? I’ve never truly understood that, but it turns out I don’t have to!) So in there, I write something like:

connectedCallback() {

let targetEl = document.querySelector(this.getAttribute('target'));

let unit = this.getAttribute('unit');

let slider = this.querySelector('input[type="range"]');

}

So far, all I’ve done here is:

- Used the value of the target attribute on <super-slider> to find the element that the range slider should affect using a CSS-esque query.
- Used the unit attribute’s value to know what CSS unit I’ll be using later in the code.
- Grabbed the range input itself by running a querySelector() within the <super-slider> element.

With all those things defined, I can add an event listener to the range input:

slider.addEventListener("input",(e) => {

let value = slider.value + unit;

targetEl.style.setProperty('font-size',value);

});

…and really, that’s it. Put all together:

class superSlider extends HTMLElement {

connectedCallback() {

let targetEl = document.querySelector(this.getAttribute('target'));

let unit = this.getAttribute('unit');

let slider = this.querySelector('input[type="range"]');

slider.addEventListener("input",(e) => {

targetEl.style.setProperty('font-size',slider.value + unit);

});

}

}

customElements.define("super-slider",superSlider);

You can see it in action with this CodePen.

<span>See the Pen <a href="https://codepen.io/meyerweb/pen/oNmXJRX">

WebCOLD 01</a> by Eric A. Meyer (<a href="https://codepen.io/meyerweb">@meyerweb</a>)

on <a href="https://codepen.io">CodePen</a>.</span>

As I said earlier, you can get to essentially the same result by running document.querySelectorAll('super-slider') and then looping through the collection to find all the bits and bobs and add the event listeners and so on. In a sense, that’s what I’ve done above, except I didn’t have to do the scraping and looping and waiting until the document has loaded — using web components abstracts all of that away. I’m also registering all the components with the browser via customElements.define(), so there’s that too. Overall, somehow, it just feels cleaner.

One thing that sets customElements.define() apart from the collect-and-loop-after-page-load approach is that custom elements fire all that connection callback code on themselves whenever they’re added to the document, all nice and encapsulated. Imagine for a moment an application where custom elements are added well after page load, perhaps as the result of user input. No problem! There isn’t the need to repeat the collect-and-loop code, which would likely have to have special handling to figure out which are the new elements and which already existed. It’s incredibly handy and much easier to work with.

But that’s not all! Suppose we want to add a “reset” button — a control that lets you set the slider back to its starting value. Adding some code to the connectedCallback() can make that happen. There’s probably a bunch of different ways to do this, so what follows likely isn’t the most clever or re-usable way. It is, instead, the way that made sense to me at the time.

let reset = slider.getAttribute('value');

let resetter = document.createElement('button');

resetter.textContent = '↺';

resetter.setAttribute('title', reset + unit);

resetter.addEventListener("click",(e) => {

slider.value = reset;

slider.dispatchEvent(

new MouseEvent('input', {view: window, bubbles: false})

);

});

slider.after(resetter);

With that code added into the connection callback, a button gets added right after the slider, and it shows a little circle-arrow to convey the concept of resetting. You could just as easily make its text “Reset”. When said button is clicked or keyboard-activated ("click" handles both, it seems), the slider is reset to the stored initial value, and then an input event is fired at the slider so the target element’s style will also be updated. This is probably an ugly, ugly way to do this! I did it anyway.

<span>See the Pen <a href="https://codepen.io/meyerweb/pen/jOdPdyQ">

WebCOLD 02</a> by Eric A. Meyer (<a href="https://codepen.io/meyerweb">@meyerweb</a>)

on <a href="https://codepen.io">CodePen</a>.</span>

Okay, so now that I can reset the value, maybe I’d also like to see what the value is, at any given moment in time? Say, by inserting a classed <span> right after the label and making its text content show the current combination of value and unit?

let label = this.querySelector('label');

let readout = document.createElement('span');

readout.classList.add('readout');

readout.textContent = slider.value + unit;

label.after(readout);

Plus, I’ll need to add the same text content update thing to the slider’s handling of input events:

slider.addEventListener("input", (e) => {

targetEl.style.setProperty("font-size", slider.value + unit);

readout.textContent = slider.value + unit;

});

I imagine I could have made this readout-updating thing a little more generic (more DRY, if you like) by creating some kind of getter/setter things on the JS class, which is totally possible to do, but that felt like a little much for this particular situation. Or I could have broken the readout update into its own function, either within the class or external to it, and passed in the readout and slider and reset value and unit to cause the update. That seems awfully clumsy, though. Maybe figuring out how to make the span a thing that observes slider changes and updates automatically? I dunno, just writing the same thing in two places seemed a lot easier, so that’s how I did it.
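For comparison, the "break it into its own function" alternative mentioned above might look roughly like the sketch below. The names formatValue and makeUpdater are made up for illustration and are not part of the tool. The objects passed in only need a value, a style.setProperty(), and a textContent, which is what the real slider, target element, and readout provide.

```javascript
// Hypothetical helper: combine a slider value and a unit into one CSS value.
// A missing unit attribute (null) is treated as "no unit".
function formatValue(value, unit) {
  return String(value) + (unit || "");
}

// Hypothetical helper: returns a single function that updates both the
// target's font size and the readout, so the logic lives in one place.
function makeUpdater(slider, targetEl, readout, unit) {
  return function update() {
    const value = formatValue(slider.value, unit);
    targetEl.style.setProperty("font-size", value);
    readout.textContent = value;
  };
}

// Inside connectedCallback(), the wiring could then be:
//   const update = makeUpdater(slider, targetEl, readout, unit);
//   slider.addEventListener("input", update);
```

Whether that is actually nicer than writing the same line twice is a judgment call; for a readout this small, the duplication is arguably easier to follow.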

So, at this point, here’s the entirety of the script, with a CodePen example of the same thing immediately after.

class superSlider extends HTMLElement {

connectedCallback() {

let targetEl = document.querySelector(this.getAttribute("target"));

let unit = this.getAttribute("unit");

let slider = this.querySelector('input[type="range"]');

slider.addEventListener("input", (e) => {

targetEl.style.setProperty("font-size", slider.value + unit);

readout.textContent = slider.value + unit;

});

let reset = slider.getAttribute("value");

let resetter = document.createElement("button");

resetter.textContent = "↺";

resetter.setAttribute("title", reset + unit);

resetter.addEventListener("click", (e) => {

slider.value = reset;

slider.dispatchEvent(

new MouseEvent("input", { view: window, bubbles: false })

);

});

slider.after(resetter);

let label = this.querySelector("label");

let readout = document.createElement("span");

readout.classList.add("readout");

readout.textContent = slider.value + unit;

label.after(readout);

}

}

customElements.define("super-slider", superSlider);

<span>See the Pen <a href="https://codepen.io/meyerweb/pen/NWoGbWX">

WebCOLD 03</a> by Eric A. Meyer (<a href="https://codepen.io/meyerweb">@meyerweb</a>)

on <a href="https://codepen.io">CodePen</a>.</span>

Anything you can imagine JS would let you do to the HTML and CSS, you can do in here. Add a class to the slider when it has a value other than its default value so you can style the reset button to fade in or be given a red outline, for example.
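As a rough sketch of that last idea, the snippet below toggles a class on the slider whenever its value differs from the stored starting value. The helper names isChanged and markChanged and the class name "changed" are assumptions made for this example, not something from the tool.

```javascript
// Hypothetical helper: has the slider moved away from its starting value?
// Range values are strings, so compare them as numbers ("2" vs "2.0").
function isChanged(currentValue, initialValue) {
  return Number(currentValue) !== Number(initialValue);
}

// Hypothetical helper: add or remove a "changed" class (an assumed name)
// on the slider, depending on whether it still holds its starting value.
function markChanged(slider, initialValue) {
  slider.classList.toggle("changed", isChanged(slider.value, initialValue));
}

// Inside connectedCallback(), this could be called from the input listener,
// where `reset` is the stored initial value:
//   slider.addEventListener("input", (e) => markChanged(slider, reset));
```

Because the reset button is inserted directly after the slider, a selector along the lines of super-slider input.changed + button would then be enough to style the button only when there is something to reset.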

Or maybe do what I did, and add some structural-fix-up code. For example, suppose I were to write:

<super-slider unit="em" target=".preview h2">

<label>Subtitle font size</label>

<input type="range" min="0.5" max="2.5" step="0.1" value="1.5" />

</super-slider>

In that bit of markup, I left off the id on the <input> and the for on the <label>, which means they have no structural association with each other. (You should never do this, but sometimes it happens.) To handle this sort of failing, I threw some code into the connection callback to detect and fix those kinds of authoring errors, because why not? It goes a little something like this:

if (!label.getAttribute('for') && slider.getAttribute('id')) {

label.setAttribute('for',slider.getAttribute('id'));

}

if (label.getAttribute('for') && !slider.getAttribute('id')) {

slider.setAttribute('id',label.getAttribute('for'));

}

if (!label.getAttribute('for') && !slider.getAttribute('id')) {

let connector = label.textContent.trim().replace(/\s+/g,'_');

label.setAttribute('for',connector);

slider.setAttribute('id',connector);

}

Once more, this is probably the ugliest way to do this in JS, but also again, it works. Now I’m making sure labels and inputs have association even when the author forgot to explicitly define it, which I count as a win. If I were feeling particularly spicy, I’d have the code pop an alert chastising me for screwing up, so that I’d fix it instead of being a lazy author.

It also occurs to me, as I review this for publication, that I didn’t try to do anything in situations where both the for and id attributes are present, but their values don’t match. That feels like something I should auto-fix, since I can’t imagine a scenario where they would need to intentionally be different. It’s possible my imagination is lacking, of course.
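A minimal sketch of what handling that mismatch case could look like follows. It folds all four situations into one hypothetical function, associate(), and assumes that when both attributes exist but disagree, the input’s id should win (other CSS or scripts may already depend on it). That choice is an assumption, not something the tool does; slugify() is likewise a made-up helper name.

```javascript
// Hypothetical helper: turn label text into a whitespace-free id.
function slugify(text) {
  return text.trim().replace(/\s+/g, "_");
}

// Hypothetical helper: make sure label[for] and input[id] agree.
// Assumption: the input's id wins, then the label's for, then the label text.
function associate(label, slider) {
  const id =
    slider.getAttribute("id") ||
    label.getAttribute("for") ||
    slugify(label.textContent);
  slider.setAttribute("id", id);
  label.setAttribute("for", id);
  return id;
}

// Inside connectedCallback(), this would be a single call:
//   associate(label, slider);
```

Note that this sketch does not check whether the generated id is already in use elsewhere in the page, which a more careful version would want to do.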

So now, here’s all just-over-40 lines of the script that makes all this work, followed by a CodePen demonstrating it.

class superSlider extends HTMLElement {

connectedCallback() {

let targetEl = document.querySelector(this.getAttribute("target"));

let unit = this.getAttribute("unit");

let slider = this.querySelector('input[type="range"]');

slider.addEventListener("input", (e) => {

targetEl.style.setProperty("font-size", slider.value + unit);

readout.textContent = slider.value + unit;

});

let reset = slider.getAttribute("value");

let resetter = document.createElement("button");

resetter.textContent = "↺";

resetter.setAttribute("title", reset + unit);

resetter.addEventListener("click", (e) => {

slider.value = reset;

slider.dispatchEvent(

new MouseEvent("input", { view: window, bubbles: false })

);

});

slider.after(resetter);

let label = this.querySelector("label");

let readout = document.createElement("span");

readout.classList.add("readout");

readout.textContent = slider.value + unit;

label.after(readout);

if (!label.getAttribute("for") && slider.getAttribute("id")) {

label.setAttribute("for", slider.getAttribute("id"));

}

if (label.getAttribute("for") && !slider.getAttribute("id")) {

slider.setAttribute("id", label.getAttribute("for"));

}

if (!label.getAttribute("for") && !slider.getAttribute("id")) {

let connector = label.textContent.trim().replace(/\s+/g, "_");

label.setAttribute("for", connector);

slider.setAttribute("id", connector);

}

}

}

customElements.define("super-slider", superSlider);

<span>See the Pen <a href="https://codepen.io/meyerweb/pen/PoVPbzK">

WebCOLD 04</a> by Eric A. Meyer (<a href="https://codepen.io/meyerweb">@meyerweb</a>)

on <a href="https://codepen.io">CodePen</a>.</span>

There are doubtless cleaner/more elegant/more clever ways to do pretty much everything I did above, considering I’m not much better than an experienced amateur when it comes to JavaScript. Don’t focus so much on the specifics of what I wrote, and more on the overall concepts at play.

I will say that I ended up using this custom element to affect more than just font sizes. In some places I wanted to alter margins; in others, the hue angle of colors. There are a couple of ways to do this. The first is what I did, which is to use a bunch of CSS variables and change their values. So the markup and relevant bits of the JS looked more like this:

<super-slider unit="em" variable="titleSize">

<label for="title-size">Title font size</label>

<input id="title-size" type="range" min="0.5" max="4" step="0.1" value="2" />

</super-slider>

let cssvar = this.getAttribute("variable");

let section = this.closest('section');

slider.addEventListener("input", (e) => {

section.style.setProperty(`--${cssvar}`, slider.value + unit);

readout.textContent = slider.value + unit;

});

The other way (that I can think of) would be to declare the target element’s selector and the property you want to alter, like this:

<super-slider unit="em" target=".preview h1" property="font-size">

<label for="title-size">Title font size</label>

<input id="title-size" type="range" min="0.5" max="4" step="0.1" value="2" />

</super-slider>

I’ll leave the associated JS as an exercise for the reader. I can think of reasons to do either of those approaches.
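For anyone who would rather peek at one possible answer, here is a hedged sketch of that second approach. The function name applyValue is invented for this example, and falling back to font-size when no property attribute is present is an assumption, not a requirement.

```javascript
// Hypothetical helper: apply a slider's value to any CSS property of the
// target element. "font-size" is an assumed fallback for when the
// property attribute is missing; a missing unit is treated as "no unit".
function applyValue(targetEl, property, value, unit) {
  targetEl.style.setProperty(property || "font-size", value + (unit || ""));
}

// Inside connectedCallback(), the wiring might then read:
//   let property = this.getAttribute("property");
//   slider.addEventListener("input", (e) => {
//     applyValue(targetEl, property, slider.value, unit);
//     readout.textContent = slider.value + unit;
//   });
```

The same markup pattern then works for margins, hue angles, or anything else, as long as the unit attribute makes sense for the chosen property.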

But wait! There’s more! Not more in-depth JS coding (even though we could absolutely keep going, and in the tool I built, I absolutely did), but there are some things to talk about before wrapping up.

First, if you need to invoke the class’s constructor for whatever reason — I’m sure there are reasons, whatever they may be — you have to do it with a super() up top. Why? I don’t know. Why would you need to? I don’t know. If I read the intro to the super page correctly, I think it has something to do with class prototypes, but the rest went so far over my head the FAA issued a NOTAM. Apparently I didn’t do anything that depends on the constructor in this article, so I didn’t bother including it.
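A small sketch may make the super() rule less mysterious. In JavaScript, a class that extends another one does not get a usable `this` in its constructor until the parent’s constructor has run, and super() is what runs it. The classes below are plain stand-ins invented for this example (Base plays the role of HTMLElement) so the snippet runs anywhere, not just in a browser.

```javascript
// A plain-class stand-in for HTMLElement, so this runs outside a browser.
class Base {
  constructor() {
    this.ready = true;
  }
}

// Calling super() first runs Base's constructor, which initializes `this`.
class WithSuper extends Base {
  constructor() {
    super();
    this.clicks = 0; // safe: `this` exists now
  }
}

// Skipping super() means `this` is never initialized in the derived class.
class WithoutSuper extends Base {
  constructor() {
    this.clicks = 0; // throws a ReferenceError at runtime
  }
}

const ok = new WithSuper();
console.log(ok.ready, ok.clicks); // true 0

let message = "no error";
try {
  new WithoutSuper();
} catch (err) {
  message = err.name;
}
console.log(message); // ReferenceError
```

The same rule applies to a custom element class: if it has a constructor at all, super() goes first, and any per-instance setup follows it.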

Second, basically all the JS I wrote in this article went into the connectedCallback() structure. This is only one of four built-in callbacks! The others are:

- disconnectedCallback(), which is fired whenever a custom element of this type is removed from the page. This seems useful if you have things that can be added or subtracted dynamically, and you want to update other parts of the DOM when they’re subtracted.
- adoptedCallback(), which is (to quote MDN) “called each time the element is moved to a new document.” I have no idea what that means. I understand all the words; it’s just that particular combination of them that confuses me.
- attributeChangedCallback(), which is fired when attributes of the custom element change. I thought about trying to use this for my super-sliders, but in the end, nothing I was doing made sense (to me) to bubble up to the custom element just to monitor and act upon. A use case that does suggest itself: if I allowed users to change the sizing unit, say from em to vh, I’d want to change other things, like the min, max, step, and default value attributes of the sliders. So, since I’d have to change the value of the unit attribute anyway, it might make sense to use attributeChangedCallback() to watch for that sort of thing and then take action. Maybe!
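To make that last use case a bit more concrete, here is a minimal sketch of watching the unit attribute. One detail worth knowing: attributeChangedCallback() only fires for attributes named in the class’s static observedAttributes list. Everything else here is an assumption for illustration: the UNIT_RANGES table and its numbers, the applyUnitRange helper, and the unit-aware-slider element name (chosen so it doesn’t clash with <super-slider>).

```javascript
// Hypothetical per-unit settings; the numbers are invented for illustration.
const UNIT_RANGES = {
  em: { min: "0.5", max: "4", step: "0.1", value: "2" },
  vh: { min: "1", max: "20", step: "0.5", value: "8" },
};

// Hypothetical helper: copy the settings for a unit onto a range input's
// attributes. Returns false and changes nothing if the unit is unknown.
function applyUnitRange(slider, unit) {
  const range = UNIT_RANGES[unit];
  if (!range) return false;
  for (const [name, val] of Object.entries(range)) {
    slider.setAttribute(name, val);
  }
  return true;
}

// Browser-only part, guarded so the helper above can run anywhere.
if (typeof HTMLElement !== "undefined" && typeof customElements !== "undefined") {
  class UnitAwareSlider extends HTMLElement {
    // Only attributes listed here trigger attributeChangedCallback().
    static get observedAttributes() {
      return ["unit"];
    }

    attributeChangedCallback(name, oldValue, newValue) {
      // The input may not exist yet when this fires during initial parsing.
      const slider = this.querySelector('input[type="range"]');
      if (name === "unit" && slider) {
        applyUnitRange(slider, newValue);
      }
    }
  }
  customElements.define("unit-aware-slider", UnitAwareSlider);
}
```

With something like this in place, changing the element’s unit attribute from script would update the slider’s attributes in one step. A fuller version would probably also need to refresh the readout and the reset value.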

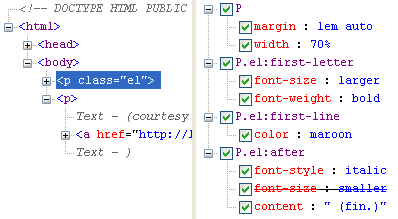

Third, I didn’t really talk about styling any of this. Well, because all of this stuff is in the Light DOM, I don’t have to worry about Shadow Walls or whatever; I can style everything the normal way. Here’s a part of the CSS I use in the CodePens, just to make things look a little nicer:

super-slider {

display: flex;

align-items: center;

margin-block: 1em;

}

super-slider input[type="range"] {

margin-inline: 0.25em 1px;

}

super-slider .readout {

width: 3em;

margin-inline: 0.25em;

padding-inline: 0.5em;

border: 1px solid #0003;

background: #EEE;

font: 1em monospace;

text-align: center;

}

Hopefully that all makes sense, but if not, let me know in the comments and I’ll clarify.

A thing I didn’t do was use the :defined pseudo-class to style custom elements that are defined, or rather, to style those that are not defined. Remember the last line of the script, where customElements.define() is called to define the custom elements? Because they are defined that way, I could add some CSS like this:

super-slider:not(:defined) {

display: none;

}

In other words, if a <super-slider> for some reason isn’t defined, make it and everything inside it just… go away. Once it becomes defined, the selector will no longer match, and the display: none will be peeled away. You could use visibility or opacity instead of display; really, it’s up to you. Heck, you could tile red warning icons in the whole background of the custom element if it hasn’t been defined yet, just to drive the point home.

The beauty of all this is, you don’t have to mess with Shadow DOM selectors like ::part() or ::slotted(). You can just style elements the way you always style them, whether they’re built into HTML or special hyphenated elements you made up for your situation and then, like the Boiling Isles’ most powerful witch, called into being.

That said, there’s a “fourth” here, which is that Shadow DOM does offer one very powerful capability that fully Light DOM custom elements lack: the ability to create a structural template with <slot> elements, and then drop your Light-DOM elements into those slots. This slotting ability does make Shadowy web components a lot more robust and easier to share around, because as long as the slot names stay the same, the template can be changed without breaking anything. This is a level of robustness that the approach I explored above lacks, and it’s built in. It’s the one thing I actually do like about Shadow DOM.

It’s true that in a case like I’ve written about here, that’s not a huge issue: I was quickly building a web component for a single tool that I could re-use within the context of that tool. It works fine in that context. It isn’t portable, in the sense of being a thing I could turn into an npm package for others to use, or probably even share around my organization for other teams to use. But then, I only put 40-50 lines worth of coding into it, and was able to rapidly iterate to create something that met my needs perfectly. I’m a lot more inclined to take this approach in the future, when the need arises, which will be a very powerful addition to my web development toolbox.

I’d love to see the templating/slotting capabilities of Shadow DOM brought into the fully Light-DOM component world. Maybe that’s what Declarative Shadow DOM is? Or maybe not! My eyes still go cross-glazed whenever I try to read articles about Shadow DOM, almost like a trickster demon lurking in the shadows casts a Spell of Confusion at me.

So there you have it: a few thousand words on my journey through coming to understand and work with these fully-Light-DOM web components, otherwise known as custom elements. Now all they need is a catchy name, so we can draw more people to the Light Side of the Web. If you have any ideas, please drop ’em in the comments!