Styling a ‘pre’ That Contains a ‘code’

Published 3 years, 2 months past

I’ve just committed my first :has() selector to production CSS and want to share it, so I will! But first, a little context that will feel familiar to nerds like me who post snippets of computer code to the Web, and have markup with this kind of tree structure (not raw source; we’d never allow this much formatting whitespace inside a <pre>):

<pre>
  <code>
    {{content goes here}}
  </code>
</pre>

It’s nicely semantic, indicating that the contents of the <pre> are in fact code of some kind, as opposed to just plain preformatted text like, say, output from a shell script or npm job, which isn’t code and thus should, perhaps, be styled in a distinct way.

Given cases like that, you’ve probably written rules that go a little something like this:

pre > code {

display: block;

background: #f1f1f1;

padding: 1.33em;

border-radius: 0.33em;

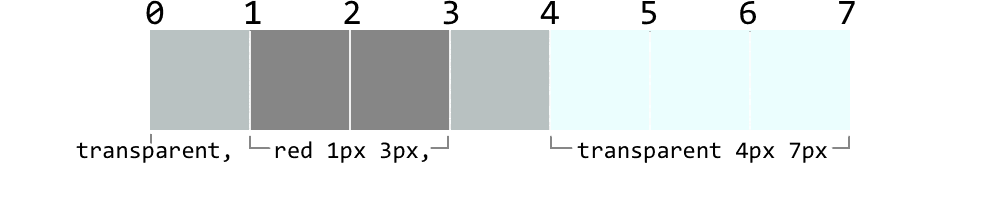

}Which says: if a <code> element is the child of a <pre> element, then turn the <code> into a block box and give it some background color, padding, etc.

It works out fine, but it always feels a little fragile. What if there are already <pre> styles that throw off the intended effect on code blocks? Do we get into specificity wars between those rules and the code-block rules? Find other ways to figure out what should be adjusted in which cases? Those are probably manageable problems, but it would be better not to have them.

It’s also, when you step back for a moment, a little weird. The <pre> is already a block box and the container for the code; why aren’t we styling that? Because unless you wrote some scripting, whether server-side or client-side, to add a class to the <pre> in scenarios like this, there wasn’t a way to address it directly based on its structural contents.

There is now:

pre:has(> code) {
  background: #f1f1f1;
  padding: 1.33em;
  border-radius: 0.33em;
}

Now I’m styling any <pre> that has a <code> as a child, which is why I took out the display: block. I don’t need it any more!
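As for the specificity worry raised earlier, here’s a minimal sketch of how the new rule interacts with an existing <pre> rule. The first rule is a hypothetical site-wide default invented for illustration, not something from a real stylesheet. Since :has() takes on the specificity of its most specific argument, pre:has(> code) works out to (0,0,2), the same as pre > code, and so it beats a bare pre at (0,0,1) regardless of source order:

```css
/* Hypothetical site-wide default for all preformatted text.
   Specificity: (0,0,1) */
pre {
  background: white;
  padding: 0.5em;
  font-size: 0.9em;
}

/* Code blocks only. :has() contributes the specificity of its
   most specific argument, so this is (0,0,2) and wins on
   background and padding. */
pre:has(> code) {
  background: #f1f1f1;
  padding: 1.33em;
  border-radius: 0.33em;
}
```

Anything the second rule doesn’t set, like the hypothetical font-size, still comes through from the first.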

But suppose you have a framework or ancient script or something that inserts classed <span> elements between the <pre> and the <code>, like this:

<pre>
  <span class="pref">
    <code>
      {{content goes here}}
    </code>
  </span>
</pre>

First of all, ew, address the root problem here if at all possible. But if that isn’t possible for whatever reason, you can still style the <pre> based on the presence of a <code> by removing the child combinator from the selector. In other words:

pre:has(code) {
  background: #f1f1f1;
  padding: 1.33em;
  border-radius: 0.33em;
}

Now I’m styling any <pre> that has a <code> as a descendant: child, grandchild, great-great-great-great grandchild, whatever.

Which is not only more robust, it’s a lot more future-proof: even if some hot new front-end framework that sticks in <span> elements or something gets added to the site next year, this style will just keep chugging along, styling <pre> elements that contain <code> elements until long after that hot new framework has cooled to ash and been chucked into the bit-bucket.
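The same selector can be flipped around to handle the other case mentioned at the start: plain preformatted text, like shell output, that contains no <code> at all. What follows is only a sketch, and the border and padding values in the second rule are made up purely to make the two kinds of block look different:

```css
/* Code blocks: any <pre> with a <code> somewhere inside it */
pre:has(code) {
  background: #f1f1f1;
  padding: 1.33em;
  border-radius: 0.33em;
}

/* Everything else: a <pre> with no <code> descendant, such as
   shell output. These values are hypothetical. */
pre:not(:has(code)) {
  border-left: 0.33em solid #ccc;
  padding-left: 1em;
}
```

Putting :has() inside :not() is allowed; what isn’t allowed is nesting one :has() inside another.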

There is one thing to keep in mind here, as pointed out by Emmanuel over on Mastodon: if you have a scenario where <pre> elements can contain child text nodes in addition to <code> blocks, the <pre> will still be styled in its entirety. Consider:

<pre>
  {{some text is here}}
  <code>
    {{content goes here}}
  </code>
  {{or text is here}}
</pre>

pre:has(> code) and pre:has(code) will still match the <pre> element here, which means all of the text (both inside and outside the <code> elements) will sit inside the light-gray box with the rounded corners. If that’s fine for your use case, great! If not, then don’t use :has() in this scenario, and stick with the pre > code {…} or pre code {…} approach of yore. That will style just the <code> elements instead of the whole <pre>, as in the example at the beginning of this article.
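For reference, here’s a sketch of that fallback in its descendant form, on the assumption that intervening <span> elements shouldn’t matter. It’s simply the rule from the beginning of the article with the child combinator removed:

```css
/* Styles only the <code>, at any depth inside a <pre>.
   Text in the <pre> outside the <code> is left alone. */
pre code {
  display: block;  /* needed again, since <code> is inline by default */
  background: #f1f1f1;
  padding: 1.33em;
  border-radius: 0.33em;
}
```

The display: block comes back because the box being styled is once again the <code> rather than the <pre>.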

As I write this, the code hasn’t gone into production on wpewebkit.org yet, but I think it will within the next week or two, and will be my first wide-production use of :has(). I feel like it’s a great way to close out 2022 and kick off 2023, because I am that kind of nerd. If you are too, I hope you enjoyed this quick dive into the world of :has().