From ABC’s to 9999999

Published 2 years, 10 months past

The other week I crossed a midpoint, of sorts: as I was driving home from a weekly commitment, my iPhone segued from Rush’s “Mystic Rhythms” to The Seatbelts’ “N.Y. Rush”, which is, lexicographically speaking, the middle of my iTu — oh excuse me, the middle of my Music Dot App Library, where I passed from the “M” songs into the “N” songs.

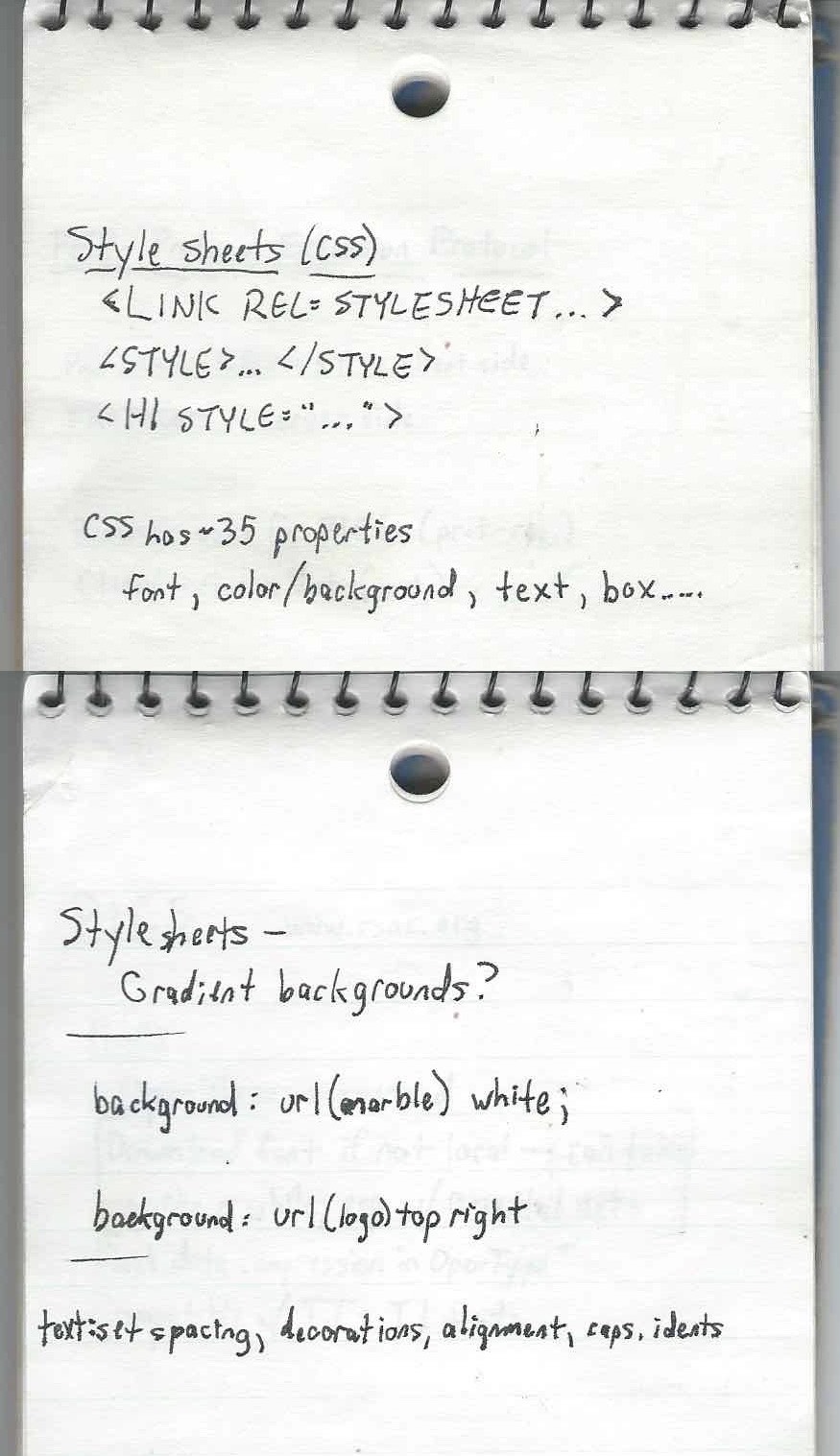

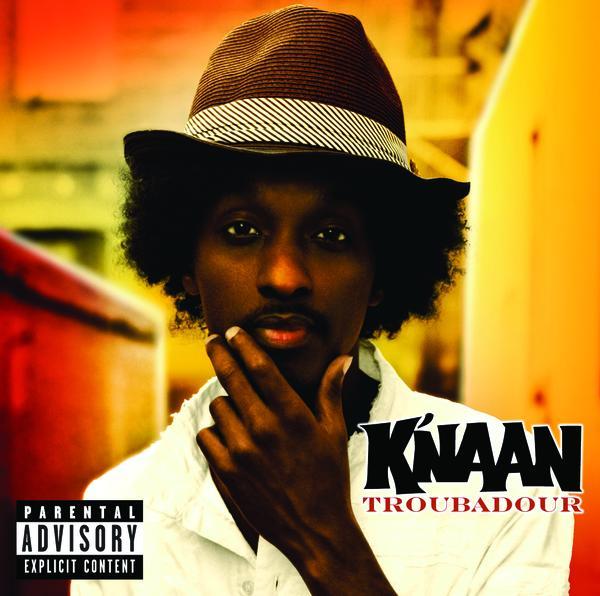

See, about a year or so ago, I took inspiration from Kevin Smokler to set about listening through my entire music library alphabetically by song title. Thus, I started with “ABC’s” by K’naan and will end, probably in a year or so, with “9999999” by Mike Morasky (a.k.a. Aperture Science Psychoacoustics Laboratory).

Every time I have to drive my car for more than a few minutes, I’ll plug in my iPhone and continue the listen from where I left off. This mainly happens during the aforementioned weekly commitment, which usually sees me driving for an hour or so. I also listen to it while I’m doing chores around the house like installing ceiling fans or diagnosing half-dead Christmas light strings.

This sort of listen is, in many ways, like listening to the entire library on shuffle, because, as Jared Spool used to point out (and probably still does), alphabetically sorting a long list of things is indistinguishable from having it randomized. For me, the main difference between alphabetical and random is that it’s a lot easier to pick back up where you left off when working through alphabetically. (Yes, Music Dot App should do that automatically, but sometimes it forgets where it was.) You can also be a lot more certain that every song gets a listen, something that’s harder to ensure if you’re listening to a random shuffle of a couple thousand tracks and your software loses its place.

There are other advantages: sometimes, artists will use the same song title, and you get interesting combinations. For example, there was “America”, which gave me a song by K’naan and then a same-titled, but very different, song by Spinal Tap. Similarly, there are titular combinations that pop out, like “Come On” by The Goo Goo Dolls, “Come On In, The Dreams Are Fine” by Deee-Lite, and “Come On Over” by Elana Stone.

Some of these combinations groove, some delight, some earn the stank face, and some make me literally laugh out loud. And some aren’t related by title but still go together really, really well. A recent example was the segue from The Prodigy’s “Narayan” to Radiohead’s “The National Anthem”, which sonically flowed just right at the switchover, almost like they’d been composed to have that effect. It made this old long-ago radio DJ smile.

I say I took inspiration from Kevin because my listen has a couple of differences to his:

- Kevin has a “no skips, ever” rule, but I will skip songs that are repeats. This happens a lot when you have both live and studio albums, as I do for a few artists (particularly Rush), or have copied tracks for lightly-engineered playlists, as I have a few times. That said, if I have a song by one artist and a cover of that song by another, I don’t skip either of them. For remixes or alternate recordings of a song by the same artist, I generally don’t skip, unless the remix is just the original song with a vaguely different beat track.

- I filtered out most of my classical content before starting. This is not because I dislike classical, but because classical works tend to sort together in unrelenting clumps — all of Beethoven’s and Mozart’s symphonies one after another after another, for example — and I wanted a varietal mix. I did keep “classical” albums like Carreras Domingo Pavarotti in Concert and Carmina Burana because they have normal-length tracks with titles that scatter them throughout the sort. The same reasoning was used to retain classic film and TV scores, even if I was stretching it a bit to leave in The Music of Cosmos (the 1980 one), which prefixes all its tracks with Roman numerals… but each track is a medley, so it got a pass. The whole-album-in-a-single-MP3 The Music of Osmos, on the other hand, did not.

All that said, I have a much shorter road than Kevin: he has a library of over twelve thousand tracks, whereas my slightly-filtered library is just shy of 2,500 tracks, or right around 160 hours. The repeated-song skips knock the total time down a bit, probably by a few hours but not much more than that. So, figure at an average of 80 minutes per week, that’s about 120 weeks, or two years and four months to get from beginning to end.
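For anyone who wants to check that math, here is the estimate as a small Python sketch. The names are made up for illustration, and the figures are the rounded ones from the paragraph above (160 hours of music, 80 minutes a week), ignoring the few hours the repeat skips save.

```python
# Back-of-the-envelope estimate using the rounded figures from the post.
# All names here are illustrative; skipped repeats are ignored.
LIBRARY_HOURS = 160       # approximate length of the filtered library
MINUTES_PER_WEEK = 80     # assumed average weekly listening time
WEEKS_PER_YEAR = 52


def weeks_to_finish(library_hours, minutes_per_week):
    """Return how many weeks it takes to play the whole library once."""
    return library_hours * 60 / minutes_per_week


weeks = weeks_to_finish(LIBRARY_HOURS, MINUTES_PER_WEEK)
years, leftover_weeks = divmod(weeks, WEEKS_PER_YEAR)
print(f"{weeks:.0f} weeks, or {years:.0f} years and {leftover_weeks:.0f} weeks")
```

Sixteen leftover weeks is a bit under four months, which is where the "two years and four months" figure comes from.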

And what will I do when I reach the end? Probably go back to better curate the sorting (e.g., configuring Soundgarden’s “4th of July” to be sorted as “Fourth of July”), create a playlist that cuts out the repeats ahead of time, and start over. But we’ll see when I get there. Maybe next time I’ll listen to it in reverse alphabetical order instead.
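That kind of sort curation could look roughly like the Python sketch below. Everything in it is an assumption for illustration: `SORT_AS` and `sort_key` are hypothetical names, and the rule that digit-led titles sort after letter-led ones is only inferred from the listen running from “ABC’s” to “9999999”, not from how Music Dot App actually implements its sorting.

```python
# Hypothetical "sort as" overrides: displayed title -> title used for sorting.
SORT_AS = {"4th of July": "Fourth of July"}


def sort_key(title):
    """Order letter-led titles before all others, ignoring case."""
    effective = SORT_AS.get(title, title).casefold()
    starts_with_letter = effective[:1].isalpha()
    # False sorts before True, so letter-led titles come first.
    return (not starts_with_letter, effective)


titles = ["9999999", "Mystic Rhythms", "4th of July", "ABC's", "N.Y. Rush"]
ordered = sorted(titles, key=sort_key)
print(ordered)
```

With the override in place, “4th of July” files under “F” rather than landing among the numbers at the very end.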